Since their launch, the first cards in AMD's Radeon R9 290 family have had a reputation for running hot and loud. It wasn't unusual for them to reach operating temperatures of up to 95 degrees Celsius under load. AMD has stated this was a design decision to convert power consumption into optimal performance, but it's nevertheless a balmy temperature if your case isn't adequately ventilated.

For those who want less heat and noise, there is an option. Sapphire claims its factory-overclocked R9 290 is 15% cooler and 40% quieter than the reference model thanks to its triple-fan design. AMD supplied a review sample, and I put it through its paces to see if Sapphire's words rang true.

Competition in this market has seemingly never been fiercer, either. The worth of a video card is no longer measured just in clock rates, but also in its supporting technologies. Consumers can now weigh everything from different graphics rendering APIs to monitor-focused solutions for the bugaboo called screen tearing that we've become all too familiar with. I was able to speak with AMD about these technologies, and we'll cover that discussion below, but first let's take a look inside the Sapphire Tri-X OC R9 290's box.

The Sapphire Tri-X OC R9 290's packaging is coated in a slightly reflective sheen, sports the usual imposing figure on its front, and lists its general features across both front and back. These include 4GB of GDDR5 memory on a 512-bit bus, 4K gaming support via HDMI and DisplayPort 1.2, Graphics Core Next (GCN) architecture, multi-display capability with Eyefinity, PowerTune monitoring and more.

Inside its smaller box are a quick installation guide, product registration information, a driver CD, a sticker, Molex to six-pin and eight-pin PCIe cables, and an HDMI cable.

The card itself is fairly large, thanks in part to an oversized cooler that extends about 1.8" beyond the circuit board. In total it measures 12.01" in length and 4.45" in width. It just cleared my mid-sized tower and left about 2.4" of breathing room with all of the hard drive cages installed. There is no backplate, so an extra bit of care is advised when handling it.

The I/O plate has one DisplayPort 1.2, one HDMI, and two Dual-Link DVI-D outputs.

The reference R9 290 shipped with a 947 MHz core clock speed. Sapphire bumped that up to 1000 MHz for its Tri-X OC model, an increase of about 5.6%. Additionally, its 4GB of memory was boosted from 5000 MHz to 5200 MHz, or 4%. That should help with playing games at high resolutions.

The Sapphire Tri-X OC R9 290 was tested inside a Corsair 550D case housing an ASUS P8Z77-V motherboard, an Intel i7-3770K processor, and 8GB of Corsair Vengeance memory, all powered by an XFX Pro 850W Black Edition PSU. The Corsair 550D is built for silence, meaning it's lined with noise-isolating foam, but its cooling capabilities are no different than most other mid-range towers. Comparisons were done with a factory-overclocked MSI Lightning GeForce GTX 680.

Each benchmark was repeated at least three times across different locations and situations, and the results were then averaged to get the most accurate measurements. Fraps was used as the primary tool. If a game had its own benchmarking option, it was used in addition to the Fraps captures.
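As a rough illustration of that averaging step, here is a minimal Python sketch. The function name `summarize_runs` and the sample values are hypothetical placeholders and not measurements from this review; the sketch only assumes each run yields one average frame rate.

```python
from statistics import mean

def summarize_runs(fps_runs):
    """Average several benchmark runs and report how far apart they were."""
    if len(fps_runs) < 3:
        raise ValueError("expected at least three runs per benchmark")
    return {
        "mean_fps": mean(fps_runs),
        "spread_fps": max(fps_runs) - min(fps_runs),
    }

# Placeholder values for illustration only, NOT results from this review.
sample_runs = [58.2, 60.1, 59.3]
summary = summarize_runs(sample_runs)
print(f"mean {summary['mean_fps']:.1f} fps, spread {summary['spread_fps']:.1f} fps")
```

A large spread between runs is usually a sign that the test scene was not repeated consistently, which is why more than one pass is worth the time.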

3DMark and the sample of games below were run at a 1920 x 1080 resolution. Later this month I may have a 3840 x 2160 display on hand, and I'll take new benchmarks at that time.

3DMark's Fire Strike benchmark is a demanding set of tests for high-performance setups. It features advanced lighting, particle effects, and highly detailed environments and character models. It pushes even the very latest video cards hard.

| 3DMark Fire Strike | MSI Lightning GeForce GTX 680 | Sapphire Tri-X OC R9 290 |
| --- | --- | --- |
| Score | 7003 | 8983 |
| Graphics Score | 7925 | 10407 |
| Physics Score | 10359 | 10090 |
| Combined Score | 2971 | 4101 |

The GTX 680 scored better than 78% of other results, while the R9 290 scored better than 89%, riding right on the coattails of 3DMark Fire Strike's reference high-end system (NVIDIA GeForce GTX Titan and Intel Core i7-4770K) and its score of 9131.
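To put the Fire Strike numbers in relative terms, the short Python sketch below computes the percentage difference between the two cards from the scores reported above. The dictionary keys and the helper name `percent_difference` are my own labels for illustration.

```python
# Scores as reported in this review's Fire Strike runs.
gtx_680 = {"score": 7003, "graphics": 7925, "physics": 10359, "combined": 2971}
r9_290 = {"score": 8983, "graphics": 10407, "physics": 10090, "combined": 4101}
REFERENCE_HIGH_END = 9131  # 3DMark's reference high-end system score

def percent_difference(baseline, value):
    """Signed percentage by which value differs from baseline."""
    return (value - baseline) / baseline * 100.0

deltas = {name: percent_difference(gtx_680[name], r9_290[name]) for name in gtx_680}
gap_to_reference = percent_difference(REFERENCE_HIGH_END, r9_290["score"])

for name, delta in deltas.items():
    print(f"{name:>9}: {delta:+.1f}%")
print(f"vs. reference system: {gap_to_reference:+.1f}%")
```

By this arithmetic the R9 290 leads by roughly 28% overall and 31% in the graphics test, and lands within about 2% of the reference system. The physics scores sit within 3% of each other, which is what you would expect from a CPU-focused test run on the same processor.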

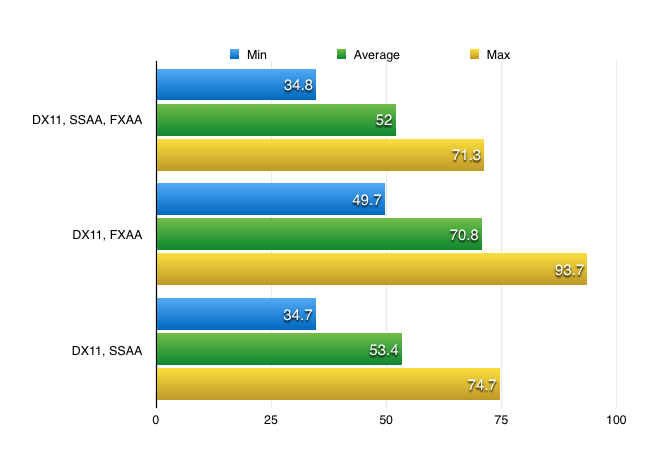

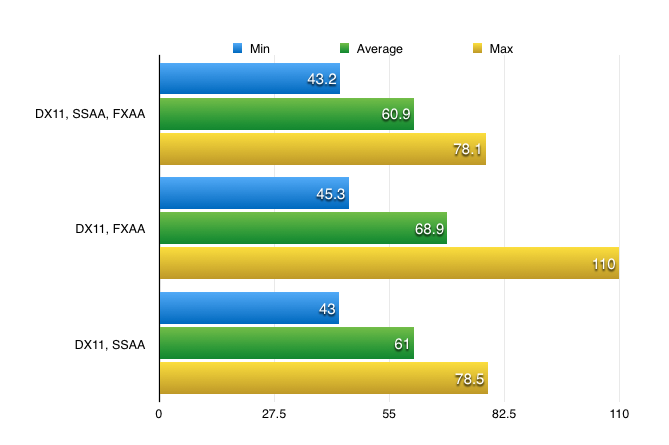

Settings: DirectX 11, Mantle, Texture Quality (Very High), Shadow Quality (Very High), DOF (High), Texture Filtering Quality (8x Anisotropic), SSAA (High/Off), Automatically Limit Texture Quality (Default), Screenspace Reflection, Parallax Occlusion Mapping, FXAA (On/Off), Contact Hardening Shadows, Tessellation, Image-based Reflection

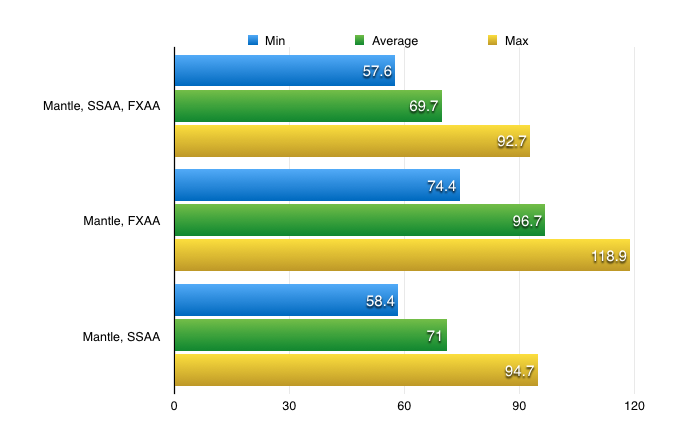

Thief is one of the latest games to use AMD's Mantle, a low-level graphics rendering API that allows developers to speak more directly to the GPU rather than having to go through, and be bottlenecked by, the CPU. The freed resources should allow for a smoother experience.

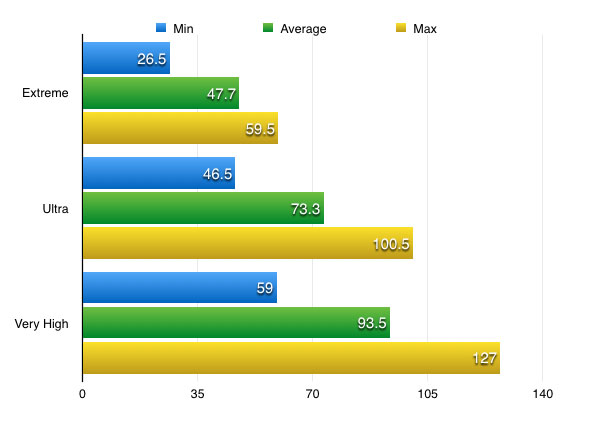

MSI Lightning GeForce GTX 680

Sapphire Tri-X OC R9 290

Mantle

Both cards were able to run Thief with all the bells and whistles without dipping below 30 frames per second, but enabling Mantle really showed the differences between cards and APIs. In fact, I managed to average almost 30 more frames per second than with DirectX 11 by disabling the more expensive supersample anti-aliasing. If you want to make the most out of a 120 Hz or higher display, Mantle is definitely a boon.

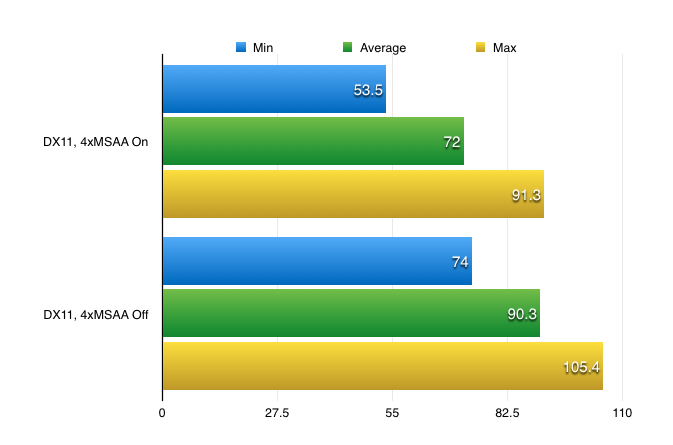

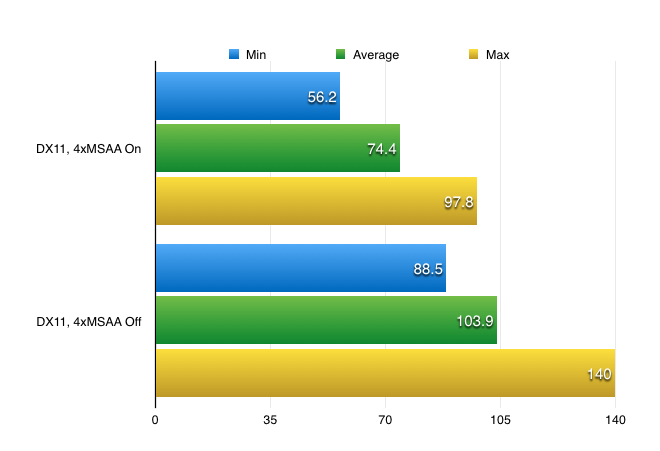

Settings: DirectX 11, Mantle, Texture Quality (Ultra), Texture Filtering (Ultra), Lighting Quality (Ultra), Effects Quality (Ultra), Post Process Quality (Ultra), Terrain Quality (Ultra), Terrain Decoration (Ultra), AA Deferred (4x MSAA On/Off), AA Post (High), Ambient Occlusion (HBAO)

Battlefield 4 is another Mantle-equipped game, though sadly Fraps was unable to capture readings for it. The results were similar to Thief's Mantle demonstrations, however, climbing at least 10 to 15 frames per second higher than DirectX 11.

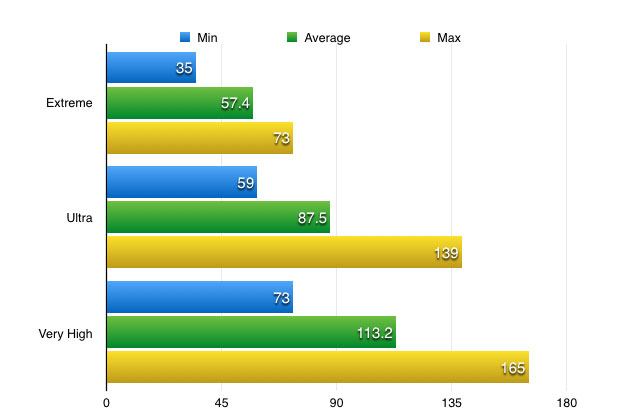

MSI Lightning GeForce GTX 680

Sapphire Tri-X OC R9 290

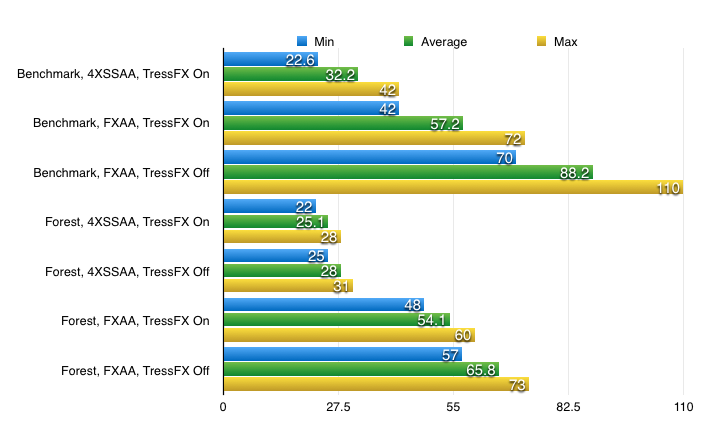

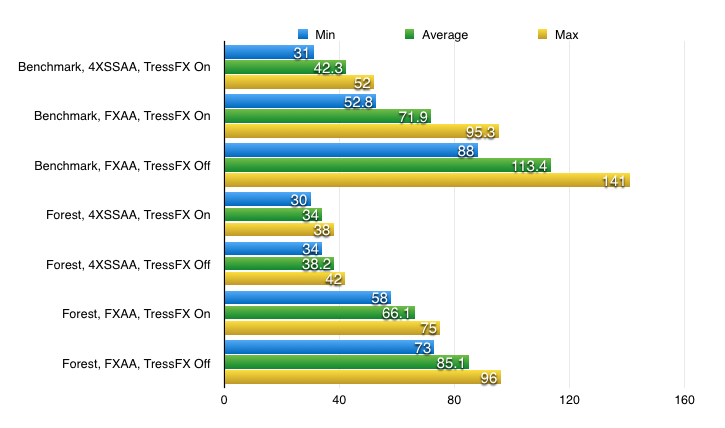

Settings: Texture Quality (Ultra), Texture Filter (Anisotropic 16x), FXAA, Shadows (Ultra), Shadow Resolution (Ultra), Level of Detail (Ultra), Post Processing, High Precision, Tessellation, Hair Quality (TressFX On/Off), Reflections (High), DOF (Ultra), SSAO (Ultra)

MSI Lightning GeForce GTX 680

Sapphire Tri-X OC R9 290

AMD's TressFX Hair physics system and supersample anti-aliasing look great together, but are quite costly in terms of performance. Even so, the Sapphire Tri-X OC R9 290 was able to enable both while staying above 30 frames per second. The GeForce GTX 680 couldn't keep up.

Settings: Extreme, Ultra and Very High presets

MSI Lightning GeForce GTX 680

Sapphire Tri-X OC R9 290

Sapphire's claims of a quieter, cooler card were honest. It idled at 20% fan speed, going up to between 37% and 41% under heavy load. At either end of the spectrum it was whisper quiet. The most audible noise came only from the case fans and power supply. If you have an efficient, high-wattage PSU, you're not going to hear a thing over the sound of your games.

Temperatures hovered around 36C when idling. Under load, Thief pushed it up to 74C. I haven't seen it go higher. Those are great numbers compared to the reference design's 90-95C, doubly so when taking into account the more closed, air-cooled nature of my Corsair 550D tower.
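For a quick sanity check against Sapphire's 15% claim, the Python sketch below compares my 74C load reading with the reference design's 90-95C range. It assumes the percentage is taken directly on the Celsius scale, which is presumably how the marketing figure was derived, and it compares readings from different test environments, so treat it as a rough indication only.

```python
CLAIMED_REDUCTION_PCT = 15.0           # Sapphire's stated figure
MEASURED_LOAD_C = 74.0                 # peak observed under load in Thief
REFERENCE_LOAD_RANGE_C = (90.0, 95.0)  # reference design range cited above

def reduction_pct(reference_c, measured_c):
    """Percentage drop from a reference temperature, on the Celsius scale."""
    return (reference_c - measured_c) / reference_c * 100.0

low, high = (reduction_pct(ref, MEASURED_LOAD_C) for ref in REFERENCE_LOAD_RANGE_C)
print(f"Observed reduction: {low:.1f}% to {high:.1f}% (claimed: {CLAIMED_REDUCTION_PCT:.0f}%)")
```

That works out to a drop of roughly 18% to 22%, so the claim holds up in this setup with some room to spare.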

Last week I spent an hour on the phone with both an AMD Gaming Scientist and a Product Manager. I'd first like to thank both of them, Richard and Victor respectively, for taking the time to speak with me. We talked at length about the technologies and value of the R9 200 series, as well as their thoughts on similar products from the competition and where AMD goes from here. Not surprisingly, our conversation began with Mantle.

Mantle is AMD's alternative to Khronos Group's OpenGL and Microsoft's DirectX, the application programming interfaces (or APIs) for graphics rendering that we've been using for more than two decades. OpenGL launched in January of 1992 with the first release of DirectX following in September of 1995. They're entrenched beasts in the industry, but that doesn't mean they're particularly efficient.

According to AMD, 25% to 40% of the time an application is bottlenecked by a single CPU core in OpenGL and DirectX games. Developers are having to program around those API limitations to keep throughput high. In short, games are spending too much time talking to and waiting on the CPU instead of going directly to the GPU. That hinders performance.
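A toy model can make that bottleneck concrete. The Python sketch below assumes CPU and GPU work overlap, so whichever stage takes longer per frame sets the frame rate. The millisecond figures are hypothetical and chosen only to show the shape of the effect; they are not AMD's numbers or measurements from this review.

```python
def frame_rate(cpu_ms, gpu_ms):
    """Frames per second when the slower of the two stages sets the pace."""
    return 1000.0 / max(cpu_ms, gpu_ms)

GPU_MS = 12.0              # hypothetical GPU render time per frame
HIGH_OVERHEAD_CPU_MS = 18.0  # hypothetical CPU cost with heavy API overhead
LOW_OVERHEAD_CPU_MS = 10.0   # hypothetical CPU cost with a thinner API

cpu_bound_fps = frame_rate(HIGH_OVERHEAD_CPU_MS, GPU_MS)
gpu_bound_fps = frame_rate(LOW_OVERHEAD_CPU_MS, GPU_MS)
print(f"CPU-bound: {cpu_bound_fps:.1f} fps, GPU-bound: {gpu_bound_fps:.1f} fps")
```

In this model, cutting CPU time only helps until the GPU becomes the limit. That is consistent with the largest gains showing up in CPU-bottlenecked scenes rather than everywhere.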

Mantle's goal is thus to get the CPU out of the way, allowing developers "closer-to-the-metal" access to the GPU. The results are impressive. During those bottlenecked situations, I saw an improvement of 10 to 30 frames per second over running the same content with DirectX 11. And this is only the beginning. Despite Mantle's beta status, there are currently over 20 supported games either launched (Sniper Elite III just released a patch adopting Mantle) or in development. Close to 100 developers are taking part in the beta program, roughly 80 of which are part of the gaming industry, and AMD is planning to finalize Mantle and publish its SDK by the end of the year.

Microsoft is going to offer similar efficiency with the upcoming DirectX 12, but AMD isn't worried. In fact, they made a very deliberate choice to share material with their competitors. "We want the ecosystem to move forward," Richard said, adding that DirectX 12 is "not competition as far as AMD is concerned."

They do believe their own API has some distinct advantages, however. The first is that it's tuned for the Graphics Core Next (GCN) architecture that exists in the PlayStation 4, Xbox One and PC platforms. That should allow for a high level of portability between them. Additionally, Mantle may have greater reach if Microsoft continues the trend of releasing its APIs exclusively for new versions of Windows. A majority of users are still running Windows 7, and it will shock no one if DirectX 12 is only available for the recently announced Windows 10. Meanwhile, AMD is hinting at Linux support for Mantle and demands no licensing fee for its use.

That initiative to move things forward seems to have shaped the development of FreeSync as well, AMD's answer to the visual artifact known as screen tearing that has plagued gaming for years. In short, screen tearing occurs when the output of the video card is not in sync with the refresh rate of the monitor. The well-worn solution has been to enable vertical synchronization, a rendering option that locks the former to the latter, but that can introduce stuttering and input lag. You have to choose the devil you can live with. Both tend to nibble distractingly on your eyes.

AMD's FreeSync and NVIDIA's G-SYNC both solve those issues by synchronizing the monitor's refresh rate to what the video card is doing rather than the other way around. But while the end results are similar and quite impressive, their approaches differ from technical and business standpoints.
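The difference between the two approaches can be sketched with a small Python simulation. This is a simplified model and not how either vendor implements it: it assumes a fixed 60 Hz panel for the vsync case, ignores the GPU stalling while it waits, ignores a panel's minimum refresh rate, and uses a hypothetical 21 ms render time per frame.

```python
import math

def vsync_display_times(ready_ms, refresh_hz=60.0):
    """With vsync, a finished frame waits for the next fixed refresh tick."""
    period = 1000.0 / refresh_hz
    return [math.ceil(t / period) * period for t in ready_ms]

def adaptive_display_times(ready_ms, max_refresh_hz=144.0):
    """With adaptive sync, the panel refreshes when a frame is ready,
    limited only by its fastest supported refresh interval."""
    min_interval = 1000.0 / max_refresh_hz
    shown, last = [], -math.inf
    for t in ready_ms:
        last = max(t, last + min_interval)
        shown.append(last)
    return shown

def intervals(times):
    """Gaps between consecutive displayed frames."""
    return [b - a for a, b in zip(times, times[1:])]

# Hypothetical workload: the GPU finishes a frame every 21 ms (about 48 fps).
ready = [21.0 * i for i in range(1, 6)]
vsync = vsync_display_times(ready)
adaptive = adaptive_display_times(ready)

print("vsync gaps   :", [round(g, 1) for g in intervals(vsync)])
print("adaptive gaps:", [round(g, 1) for g in intervals(adaptive)])
print("vsync delay  :", [round(s - r, 1) for s, r in zip(vsync, ready)])
```

In the vsync case the gaps between displayed frames alternate between one and two refresh periods, which is the stutter, and every frame waits some extra milliseconds for a tick, which is the added lag. In the adaptive case each frame appears as soon as it is ready, so the gaps stay at an even 21 ms.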

G-SYNC requires a physical module to be attached to the monitor itself. That proprietary device, supplied by NVIDIA, does most of the work, but it also increases the bill of materials. For AMD's part, they opted to go directly to the Video Electronics Standards Association (VESA) and appealed for adaptive-sync to become standard in all DisplayPort 1.2a links. VESA accepted the proposal. This solution requires no extra hardware. No licensing fee. No extra cost. It even has a larger frequency range, anywhere from 9 Hz to 240 Hz, all without screen tearing or input lag. And better still, there's nothing to restrict vendors from supporting both technologies.

Of course, you can't actually buy a FreeSync monitor today. AMD's Product Manager expects them to arrive by January of next year at the latest, although one partner is ready to launch this December. Hopefully we'll hear more about them soon, with estimated costs $100 to $200 less than the current G-SYNC displays.

4K gaming was the next topic, but most users still run games at their monitor's maximum resolution of 1080p even if their hardware is capable of pushing further. To get around that limitation, a method called downsampling or ordered grid supersampling anti-aliasing (OGSSAA) has become somewhat popular in various circles. It essentially renders the game at a higher resolution and then downscales it to your monitor's native resolution for a cleaner, sharper image. A Twitter campaign has appeared asking AMD for official support for OGSSAA, and I asked if there were any plans to adopt it in future updates. There's nothing definitive just yet, but I was told their driver team is looking into it and that the GCN architecture would be well suited to the task.
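The basic idea behind downsampling can be sketched in a few lines of Python. This minimal example assumes a grayscale image stored as a list of rows and uses a plain box filter, averaging each 2x2 block into one output pixel. Actual driver implementations may use different filters, so this is an illustration of the principle and not of any vendor's method.

```python
def downsample(image, factor=2):
    """Average each factor x factor block of pixels into one output pixel."""
    height, width = len(image), len(image[0])
    if height % factor or width % factor:
        raise ValueError("image dimensions must be divisible by the factor")
    out = []
    for y in range(0, height, factor):
        row = []
        for x in range(0, width, factor):
            block = [image[y + dy][x + dx]
                     for dy in range(factor) for dx in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out

# A tiny 4x4 "high resolution" render of a jagged diagonal edge (0 = black, 255 = white).
hi_res = [
    [0,   0, 255, 255],
    [0, 255, 255, 255],
    [0,   0,   0, 255],
    [0,   0,   0,   0],
]
low_res = downsample(hi_res)
print(low_res)
```

Pixels that straddle the edge come out as intermediate grays instead of hard black or white, which is where the smoother look comes from. The cost is that the GPU has to render four times as many pixels for a 2x factor in each dimension.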

Finally, I asked when we might be seeing their answer to NVIDIA's 900 series. Were the leaks about a hybrid air and water GPU cooler for an AMD Radeon R9 390X accurate? That got a bit of a chuckle. The photo in question did not come from them. And the R9 200 series is here to stay for the holiday. However, they will have a few announcements about positioning coming up.

The Sapphire Tri-X OC R9 290 does pretty much everything it set out to do wonderfully. It's quieter and cooler than the reference design, never operating at more than a whisper. AMD's Mantle API proved itself as well, posting some great numbers during my benchmarks. There's no question that it's a very solid card, and I'm looking forward to seeing more about Mantle and FreeSync in the months ahead.

At $380, it sits above the recently launched NVIDIA GeForce GTX 970. NVIDIA's new line is priced and performing aggressively. As a counter, AMD's Never Settle promotion offers three free games to choose from out of a wide selection - Alien: Isolation and Star Citizen being recent additions - with the purchase of an R9 series graphics card from participating vendors. That is worth consideration if you don't already have those titles. And with Star Citizen also supporting Mantle, I've no doubt AMD hardware will be a great place to play it.
